Google has scrambled to remove third-party apps that led users to child porn sharing groups on WhatsApp in the wake of TechCrunch’s report about the problem last week. We contacted Google with the name of one of these apps and evidence that it and others offered links to WhatsApp groups for sharing child exploitation imagery. Following publication of our article, Google removed that app and at least five like it from the Google Play store. Several of these apps had over 100,000 downloads, and they’re still functional on devices that already downloaded them.

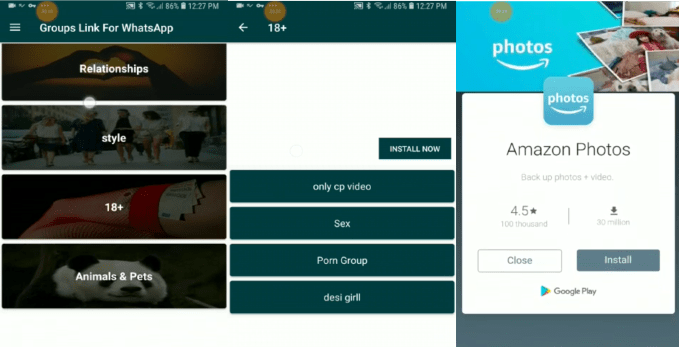

A screenshot from today of active child exploitation groups on WhatsApp. Phone numbers and photos redacted

WhatsApp failed to adequately police its platform, confirming to TechCrunch that it’s only moderated by its own 300 employees and not Facebook’s 20,000 dedicated security and moderation staffers. It’s clear that scalable and efficient artificial intelligence systems are not up to the task of protecting the 1.5 billion-user WhatsApp community, and companies like Facebook must invest more in unscalable human investigators.

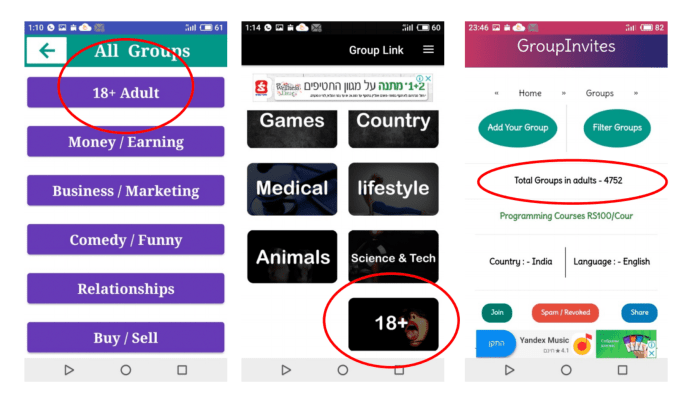

But now, new research provided exclusively to TechCrunch by anti-harassment algorithm startup AntiToxin shows that these removed apps that hosted links to child porn sharing rings on WhatsApp were supported with ads run by Google and Facebook’s ad networks. AntiToxin found 6 of these apps ran Google AdMob, 1 ran Google Firebase, 2 ran Facebook Audience Network, and 1 ran StartApp. These ad networks earned a cut of brands’ marketing spend while allowing the apps to monetize and sustain their operations by hosting ads for Amazon, Microsoft, Motorola, Sprint, Sprite, Western Union, Dyson, DJI, Gett, Yandex Music, Q Link Wireless, Tik Tok, and more.

The situation reveals that tech giants aren’t just failing to spot offensive content in their own apps, but also in third-party apps that host their ads and that earn them money. While apps like “Group Links For Whats” by Lisa Studio let people discover benign links to WhatsApp groups for sharing legal content and discussing topics like business or sports, TechCrunch found they also hosted links with titles such as “child porn only no adv” and “child porn xvideos” that led to WhatsApp groups with names like “Children” or “videos cp”, a known abbreviation for ‘child pornography’.

In a video provided by AntiToxin, the app “Group Links For Whats by Lisa Studio” that ran Google AdMob is shown displaying an interstitial ad for Q Link Wireless before providing WhatsApp group search results for “child”. A group described as “Child nude FBI POLICE” is surfaced, and when the invite link is clicked, it opens within WhatsApp to a group called “Children”. (No illegal imagery is shown in this video or article. TechCrunch has omitted the end of the video that showed a URL for an illegal group and the phone numbers of its members.)

Another video shows the app “Group Link For whatsapp by Video Status Zone” that ran Google AdMob and Facebook Audience Network displaying a link to a WhatsApp group described as “only cp video”. When tapped, the app first surfaces an interstitial ad for Amazon Photos before revealing a button for opening the group within WhatsApp. These videos show how alarmingly easy it was for people to find illegal content sharing groups on WhatsApp, even without WhatsApp’s help.

Zero Tolerance Doesn’t Mean Zero Illegal Content

In response, a Google spokesperson tells me that these group discovery apps violated its content policies and it’s continuing to look for more like them to ban. When they’re identified and removed from Google Play, it also suspends their access to its ad networks. However, it refused to disclose how much money these apps earned and whether it would refund the advertisers. The company provided this statement:

“Google has a zero tolerance approach to child sexual abuse material and we’ve invested in technology, teams and partnerships with groups like the National Center for Missing and Exploited Children, to tackle this issue for more than two decades. If we identify an app promoting this kind of material that our systems haven’t already blocked, we report it to the relevant authorities and remove it from our platform. These policies apply to apps listed in the Play store as well as apps that use Google’s advertising services.”

| App | Developer | Ad Network | Estimated Installs | Last Day Ranked |
| --- | --- | --- | --- | --- |
| Unlimited Whats Groups Without Limit Group links | Jack Rehan | Google AdMob | 200,000 | 12/18/2018 |
| Unlimited Group Links for Whatsapp | NirmalaAppzTech | Google AdMob | 127,000 | 12/18/2018 |
| Group Invite For Whatsapp | Villainsbrain | Google Firebase | 126,000 | 12/18/2018 |
| Public Group for WhatsApp | Bit-Build | Google AdMob, Facebook Audience Network | 86,000 | 12/18/2018 |
| Group links for Whats – Find Friends for Whats | Lisa Studio | Google AdMob | 54,000 | 12/19/2018 |
| Unlimited Group Links for Whatsapp 2019 | Natalie Pack | Google AdMob | 3,000 | 12/20/2018 |
| Group Link For whatsapp | Video Status Zone | Google AdMob, Facebook Audience Network | 97,000 | 11/13/2018 |
| Group Links For Whatsapp – Free Joining | Developers.pk | StartAppSDK | 29,000 | 12/5/2018 |
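The per-network counts AntiToxin reported can be cross-checked against the table's rows. The following is a minimal sketch in Python, assuming the rows are transcribed by hand from the table; the names `APPS`, `tally_networks`, and `total_installs` are illustrative and not part of AntiToxin's research. The install figures are estimates, so the total is only a rough upper bound on reach, since one device may have installed several of these apps.

```python
from collections import Counter

# Rows transcribed from the table: (app, developer, ad networks, estimated installs).
# An app that ran two ad networks is listed with both.
APPS = [
    ("Unlimited Whats Groups Without Limit Group links", "Jack Rehan",
     ["Google AdMob"], 200_000),
    ("Unlimited Group Links for Whatsapp", "NirmalaAppzTech",
     ["Google AdMob"], 127_000),
    ("Group Invite For Whatsapp", "Villainsbrain",
     ["Google Firebase"], 126_000),
    ("Public Group for WhatsApp", "Bit-Build",
     ["Google AdMob", "Facebook Audience Network"], 86_000),
    ("Group links for Whats – Find Friends for Whats", "Lisa Studio",
     ["Google AdMob"], 54_000),
    ("Unlimited Group Links for Whatsapp 2019", "Natalie Pack",
     ["Google AdMob"], 3_000),
    ("Group Link For whatsapp", "Video Status Zone",
     ["Google AdMob", "Facebook Audience Network"], 97_000),
    ("Group Links For Whatsapp – Free Joining", "Developers.pk",
     ["StartAppSDK"], 29_000),
]


def tally_networks(apps):
    """Count how many apps ran each ad network."""
    counts = Counter()
    for _app, _developer, networks, _installs in apps:
        counts.update(networks)
    return counts


def total_installs(apps):
    """Sum the estimated installs across all listed apps."""
    return sum(installs for *_rest, installs in apps)


if __name__ == "__main__":
    for network, count in tally_networks(APPS).most_common():
        print(f"{network}: {count} app(s)")
    print(f"Total estimated installs: {total_installs(APPS):,}")
```

Tallied this way, the rows agree with the counts quoted in the article: six apps on Google AdMob, two on Facebook Audience Network, and one each on Google Firebase and StartApp.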

Facebook meanwhile blamed Google Play, saying the apps’ eligibility for its Facebook Audience Network ads was tied to their availability on Google Play and that the apps were removed from FAN when booted from the Android app store. The company was more forthcoming, telling TechCrunch it will refund advertisers whose promotions appeared on these abhorrent apps. It’s also pulling Audience Network from all apps that let users discover WhatsApp Groups.

A Facebook spokesperson tells TechCrunch that “Audience Network monetization eligibility is closely tied to app store (in this case Google) review. We removed [Public Group for WhatsApp by Bit-Build] when Google did – it is not currently monetizing on Audience Network. Our policies are on our website and out of abundance of caution we’re ensuring Audience Network does not support any group invite link apps. This app earned very little revenue (less than $500), which we are refunding to all impacted advertisers.”

Facebook also provided this statement about WhatsApp’s stance on illegal imagery sharing groups and third-party apps for finding them:

“WhatsApp does not provide a search function for people or groups – nor does WhatsApp encourage publication of invite links to private groups. WhatsApp regularly engages with Google and Apple to enforce their terms of service on apps that attempt to encourage abuse on WhatsApp. Following the reports earlier this week, WhatsApp asked Google to remove all known group link sharing apps. When apps are removed from Google Play store, they are also removed from Audience Network.”

An app with links for discovering illegal WhatsApp Groups runs an ad for Amazon Photos

Israeli NGOs Netivei Reshet and Screen Savers worked with AntiToxin to provide a report published by TechCrunch about the wide extent of child exploitation imagery they found on WhatsApp. Facebook and WhatsApp are still waiting on the groups to work with Israeli police to provide their full research so WhatsApp can delete illegal groups they discovered and terminate user accounts that joined them.

AntiToxin develops technologies for protecting online networks against harassment, bullying, shaming, predatory behavior and sexually explicit activity. It was co-founded by Zohar Levkovitz, who sold Amobee to SingTel for $400M, and Ron Porat, who was the CEO of ad-blocker Shine. [Disclosure: The company also employs Roi Carthy, who contributed to TechCrunch from 2007 to 2012.] “Online toxicity is at unprecedented levels, at unprecedented scale, with unprecedented risks for children, which is why completely new thinking has to be applied to technology solutions that help parents keep their children safe,” Levkovitz tells me. The company is pushing Apple to remove WhatsApp from the App Store until the problems are fixed, citing how Apple temporarily suspended Tumblr due to child pornography.

Ad Networks Must Be Monitored

Encryption has proven an impediment to WhatsApp preventing the spread of child exploitation imagery. WhatsApp can’t see what is shared inside of group chats. Instead it has to rely on the few pieces of public and unencrypted data such as group names and profile photos plus their members’ profile photos, looking for suspicious names or illegal images. The company matches those images to a PhotoDNA database of known child exploitation photos to administer bans, and has human moderators investigate if seemingly illegal images aren’t already on file. It then reports its findings to law enforcement and the National Center For Missing And Exploited Children. Strong encryption is important for protecting privacy and political dissent, but also thwarts some detection of illegal content and thereby necessitates more manual moderation.

With just 300 total employees and only a subset working on security or content moderation, WhatsApp seems understaffed to manage such a large user base. It’s tried to depend on AI to safeguard its community. However, that technology can’t yet perform the nuanced investigations necessary to combat exploitation. WhatsApp runs semi-independently of Facebook, but could hire more moderators to investigate group discovery apps that lead to child pornography if Facebook allocated more resources to its acquisition.

WhatsApp group discovery apps featured Adult sections that contained links to child exploitation imagery groups

Google and Facebook, with their vast headcounts and profit margins, are neglecting to properly police who hosts their ad networks. The companies have sought to earn extra revenue by powering ads on other apps, yet failed to assume the necessary responsibility to ensure those apps aren’t facilitating crimes. Stricter examinations of in-app content should be administered before an app is accepted to app stores or ad networks, and periodically once they’re running. And when automated systems can’t be deployed, as can be the case with policing third-party apps, human staffers should be assigned despite the cost.

It’s becoming increasingly clear that social networks and ad networks that profit off of other people’s content can’t be low-maintenance cash cows. Companies should invest ample money and labor into safeguarding any property they run or monetize even if it makes the opportunities less lucrative. The strip-mining of the internet without regard for consequences must end.

from Google – TechCrunch https://tcrn.ch/2T8FvB2
